Interpreting compiler results in D365FO using PowerShell

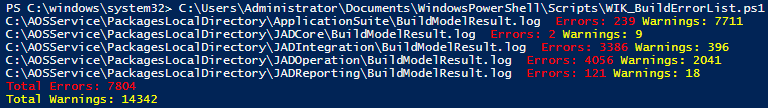

When you build your code, the results are hard to interpret, and the Visual Studio error pane caps them at 1000 entries per category. The compiler does, however, write output files with more detail into each package folder. We have written PowerShell scripts that analyze and interpret the compiler results of Microsoft Dynamics 365 for Finance and Operations in a more meaningful way.

The BuildModelResult.log and .xml files contain the details of your errors, warnings and tasks. The following script parses the log files and counts the warnings and errors per package, which gives a better idea of the work remaining during your implementation or upgrade:

    # Set to $true to list only the packages that have errors
    $displayErrorsOnly = $false
    $rootDirectory = "C:\AOSService\PackagesLocalDirectory"

    # Find the compiler log in each package folder
    $results = Get-ChildItem -Path $rootDirectory -Filter BuildModelResult.log -Recurse -Depth 1 -ErrorAction SilentlyContinue -Force
    $totalErrors = 0
    $totalWarnings = 0

    foreach ($result in $results)
    {
        try
        {
            # The summary line starts with "Errors:"; its last token is the count
            $errorText = Select-String -LiteralPath $result.FullName -Pattern '^Errors:' | ForEach-Object {$_.Line}
            $errorCount = [int]$errorText.Split()[-1]
            $totalErrors += $errorCount

            # Same approach for the "Warnings:" summary line
            $warningText = Select-String -LiteralPath $result.FullName -Pattern '^Warnings:' | ForEach-Object {$_.Line}
            $warningCount = [int]$warningText.Split()[-1]
            $totalWarnings += $warningCount

            # Totals are still accumulated for packages that are not displayed
            if ($displayErrorsOnly -and $errorCount -eq 0)
            {
                continue
            }

            Write-Host "$($result.DirectoryName)\$($result.Name) " -NoNewline

            if ($errorCount -gt 0)
            {
                Write-Host " $errorText" -NoNewline -ForegroundColor Red
            }

            if ($warningCount -gt 0)
            {
                Write-Host " $warningText" -ForegroundColor Yellow
            }
            else
            {
                # Terminate the output line when there is no warning text to print
                Write-Host
            }
        }
        catch
        {
            # A missing summary line or an unparsable count ends up here
            Write-Host
            Write-Host "Error during processing $($result.FullName)"
        }
    }

    Write-Host "Total Errors: $totalErrors" -ForegroundColor Red
    Write-Host "Total Warnings: $totalWarnings" -ForegroundColor Yellow

The compiler results are displayed in the following format as an overview:

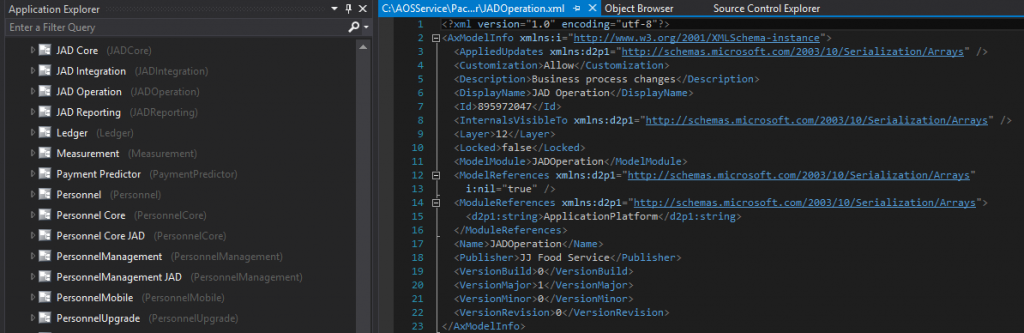

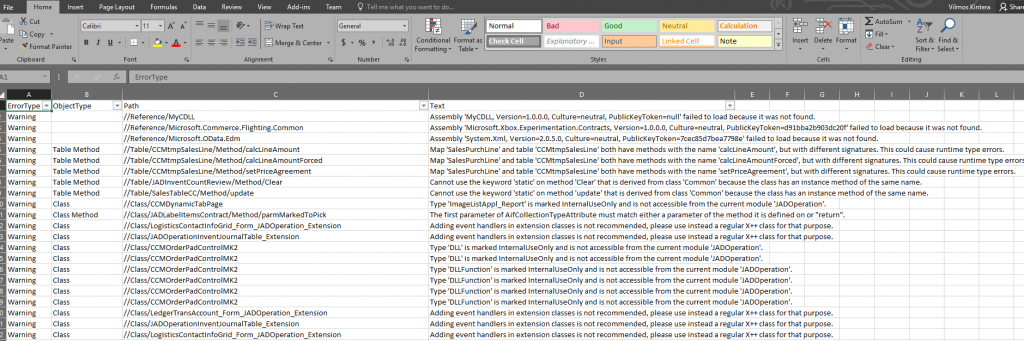

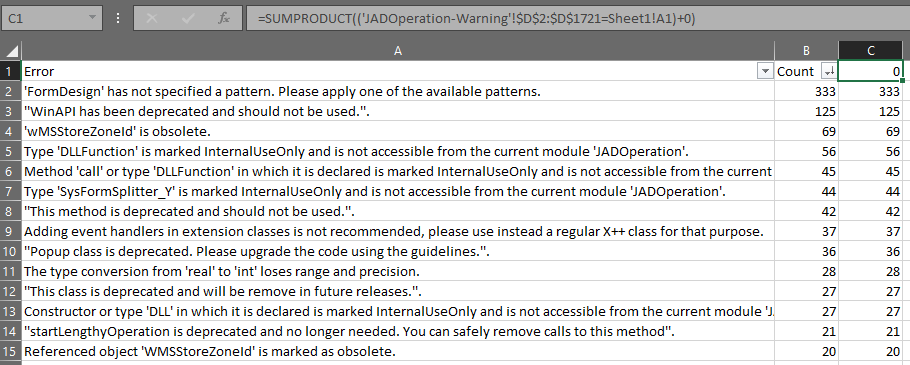

For a detailed analysis, we also have PowerShell scripts that extract the errors and save them to a CSV file for easier processing. Opening the file in Excel lets you format the entries as a table.
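To illustrate the idea, here is a minimal sketch of such an extraction. It is not one of the scripts from the repository. It assumes that the XML result file holds one `Diagnostic` element per finding, with `Severity`, `Path`, `Line` and `Message` child elements. Those element names, the function name `Export-CompilerDiagnostic` and the example paths are assumptions, so check them against a result file from your own environment.

```powershell
# Sketch only: the element names (Diagnostic, Severity, Path, Line, Message)
# are assumptions about the XML layout and may differ in your environment.
function Export-CompilerDiagnostic
{
    param(
        [Parameter(Mandatory)] [string] $XmlPath,   # compiler result XML file
        [Parameter(Mandatory)] [string] $CsvPath,   # CSV file to create
        [string] $Severity = 'Error'                # severity to keep, e.g. 'Error' or 'Warning'
    )

    # local-name() keeps the query working even if the file declares a default namespace
    Select-Xml -LiteralPath $XmlPath -XPath "//*[local-name()='Diagnostic']" |
        ForEach-Object { $_.Node } |
        Where-Object { $_.Severity -eq $Severity } |
        ForEach-Object {
            # One flat row per diagnostic so Excel can treat it as a table
            [pscustomobject]@{
                Severity = $_.Severity
                Path     = $_.Path
                Line     = $_.Line
                Message  = $_.Message
            }
        } |
        Export-Csv -LiteralPath $CsvPath -NoTypeInformation -Encoding UTF8
}
```

A call could look like `Export-CompilerDiagnostic -XmlPath "C:\AOSService\PackagesLocalDirectory\MyModel\BuildModelResult.xml" -CsvPath "C:\Temp\MyModel-Error.csv"`, where `MyModel` and the output path are placeholders.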

We typically copy all error texts to a new sheet, remove the duplicate entries, then count how often each remaining text occurs in the original sheet to find the top-ranking errors and any low-hanging fruit:

=SUMPRODUCT(('JADOperation-Warning'!$D$2:$D$1721=Sheet1!A2)+0)
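The same ranking can also be produced directly in PowerShell instead of Excel. The sketch below assumes the CSV file has a column named `Message`; that column name and the function name `Get-TopCompilerMessage` are hypothetical, so adjust them to the file you actually export.

```powershell
# Sketch only: assumes the CSV has a column called Message (hypothetical name).
function Get-TopCompilerMessage
{
    param(
        [Parameter(Mandatory)] [string] $CsvPath,   # CSV file with one row per diagnostic
        [string] $Column = 'Message',               # column holding the error or warning text
        [int] $Top = 20                             # number of distinct texts to return
    )

    Import-Csv -LiteralPath $CsvPath |
        Group-Object -Property $Column -NoElement |    # one group per distinct text
        Sort-Object -Property Count -Descending |      # most frequent first
        Select-Object -First $Top -Property Count, Name
}
```

Each returned row carries the text in `Name` and its number of occurrences in `Count`, which corresponds to the deduplicated sheet plus the `SUMPRODUCT` count.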

This makes it efficient to decide what to fix first and what to fix next, and it is easier to delegate tasks this way.

Source code for all four scripts is available on GitHub.